[cB] - Performance Faceoff: Spring Boot WebFlux vs. Express.js IO – Unleashing the Speed Demons

Dive into Spring Boot WebFlux, Netty, and Express.js for a glimpse into the dynamic realms of modern web development.

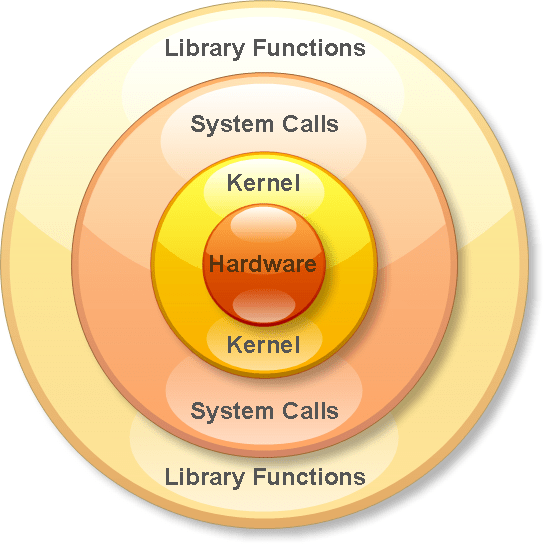

1. The Cost of Syscalls

1.1 Syscalls in Express.js

Express.js, built on top of Node.js, follows an event-driven, non-blocking architecture: Node.js executes JavaScript on the V8 engine and delegates its event loop and asynchronous I/O to the libuv library. While this approach brings benefits like high concurrency and scalability, every I/O operation still has to go through the underlying operating system via syscalls.

Context Switching Overhead

In an event-driven model like Node.js, many asynchronous operations can be in flight at once without blocking other work. Each of these operations, however, still turns into one or more syscalls, and every syscall crosses from user mode into kernel mode and back. A full context switch, in which the CPU saves one thread's state and restores another's, happens on top of that whenever a thread blocks or work is handed to another thread. Node.js takes the latter path for file I/O in particular: libuv runs filesystem operations on a small worker thread pool (four threads by default) rather than on the event loop. In scenarios with high-frequency I/O, the cost of these mode and context switches adds up.

Syscall Execution Overhead

Syscalls in Node.js are essential for tasks such as file I/O, network operations, and other interactions with the operating system. The non-blocking design lets the application keep processing other tasks while I/O is pending, but initiating and completing each syscall still has a fixed cost. That cost becomes noticeable when an application performs a large number of small I/O operations, because it is paid per call rather than per byte.
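Because the overhead is paid per call, runtimes and libraries batch small writes. The minimal Java sketch below makes that visible under one stated assumption: `CountingSink` is a hypothetical stand-in for the kernel boundary that simply counts how many write calls reach it. With a real unbuffered `FileOutputStream`, each of those calls would be a separate `write(2)` syscall.

```java
import java.io.BufferedOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;

final class SyscallBatching {
    /**
     * Hypothetical stand-in for the kernel boundary: counts how many write calls reach it.
     * With a real FileOutputStream or socket stream, each call would be one write(2) syscall.
     */
    static final class CountingSink extends OutputStream {
        int calls;

        @Override public void write(int b) { calls++; }

        @Override public void write(byte[] b, int off, int len) { calls++; }
    }

    /** Writes n bytes one at a time directly to the sink: one boundary crossing per byte. */
    static int unbufferedCalls(int n) {
        CountingSink sink = new CountingSink();
        for (int i = 0; i < n; i++) {
            sink.write('x');
        }
        return sink.calls;
    }

    /** Writes the same n bytes through a buffer: the sink sees one call per full (or final) buffer. */
    static int bufferedCalls(int n, int bufferSize) {
        CountingSink sink = new CountingSink();
        try (OutputStream out = new BufferedOutputStream(sink, bufferSize)) {
            for (int i = 0; i < n; i++) {
                out.write('x');
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sink.calls;
    }

    public static void main(String[] args) {
        System.out.println("unbuffered: " + unbufferedCalls(100_000));      // 100000
        System.out.println("buffered:   " + bufferedCalls(100_000, 8192)); // 13
    }
}
```

Writing 100,000 bytes one at a time reaches the sink 100,000 times, while an 8 KiB `BufferedOutputStream` reaches it 13 times: 12 full buffers plus the final flush on close.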

1.2 Syscalls in Spring Boot WebFlux

Spring Boot WebFlux adopts a reactive programming model and, by default, runs on the Netty server (through Reactor Netty), which is known for its event-driven architecture. This combination does not avoid syscalls, since every socket read and write still goes through the kernel, but it avoids blocking threads on them, which keeps the number of threads and context switches low.

Reactive Programming and the Reactor Pattern

WebFlux is built on Project Reactor, a reactive library in which operations are expressed as asynchronous sequences of events. Underneath it, Netty implements the reactor pattern: a small number of event-loop threads wait for readiness notifications from the operating system and dispatch each event to the handler registered for the corresponding connection. This allows WebFlux to handle I/O without a dedicated thread per connection, which reduces the number of threads that must be scheduled and the context switches between them.
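The reactor pattern can be sketched with the JDK's own NIO API, which is what Netty's NIO transport builds on. In this minimal example, in-process `Pipe`s are an assumed stand-in for client connections: a single thread registers every pipe with one `Selector`, waits once for all of them, and dispatches each readable event to the code that handles that connection. `MiniReactor`, `run`, and `pipesWithMessages` are hypothetical names.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class MiniReactor {
    /** Single-threaded reactor: one Selector watches every channel and dispatches readable events. */
    static Map<Integer, String> run(List<Pipe> pipes, int expectedBytes) {
        Map<Integer, StringBuilder> received = new TreeMap<>();
        try (Selector selector = Selector.open()) {
            for (int id = 0; id < pipes.size(); id++) {
                Pipe.SourceChannel source = pipes.get(id).source();
                source.configureBlocking(false);                      // required before registering
                source.register(selector, SelectionKey.OP_READ, id);  // attachment = connection id
            }
            ByteBuffer buf = ByteBuffer.allocate(256);
            int total = 0;
            while (total < expectedBytes) {
                selector.select();                                    // one wait covers all channels
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();                                      // the caller must clear selected keys
                    buf.clear();
                    int n = ((Pipe.SourceChannel) key.channel()).read(buf);
                    if (n > 0) {                                      // "handler": collect this connection's bytes
                        total += n;
                        buf.flip();
                        received.computeIfAbsent((Integer) key.attachment(), k -> new StringBuilder())
                                .append(StandardCharsets.UTF_8.decode(buf));
                    }
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Map<Integer, String> result = new TreeMap<>();
        received.forEach((id, sb) -> result.put(id, sb.toString()));
        return result;
    }

    /** Creates `count` pipes and writes "msg-<id>" into each, simulating data arriving on connections. */
    static List<Pipe> pipesWithMessages(int count) {
        List<Pipe> pipes = new ArrayList<>();
        try {
            for (int id = 0; id < count; id++) {
                Pipe pipe = Pipe.open();
                pipe.sink().write(ByteBuffer.wrap(("msg-" + id).getBytes(StandardCharsets.UTF_8)));
                pipes.add(pipe);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return pipes;
    }

    public static void main(String[] args) {
        System.out.println(run(pipesWithMessages(3), 15)); // {0=msg-0, 1=msg-1, 2=msg-2}
    }
}
```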

Netty's Event-Driven Model

When Spring Boot WebFlux runs on the Netty server, Netty's event loops execute I/O tasks asynchronously, with each event-loop thread serving many connections. Because threads do not block waiting on individual connections, this model avoids most of the context switching a thread-per-connection server incurs, which improves the responsiveness and scalability of WebFlux applications, especially with high levels of concurrent connections.

2. Epoll Benefits in Spring Boot WebFlux

2.1 The Epoll Advantage

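Scalable I/O Event Notification: One of the key advantages of Spring Boot WebFlux is its use of Netty, which on Linux relies on the Epoll mechanism, either through Netty's native Epoll transport or through the JDK's NIO selector, which is itself Epoll-based on Linux. Epoll is a scalable I/O event notification mechanism: unlike select and poll, which pass in and scan the whole set of watched file descriptors on every call, Epoll keeps that set registered in the kernel and returns only the descriptors that are ready. This makes it efficient to handle a large number of connections simultaneously, which is particularly beneficial when the application serves a massive number of clients concurrently, such as high-throughput applications or microservices handling numerous requests.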
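The "only ready descriptors" property can be observed from Java, because the JDK's default `Selector` on Linux uses Epoll. The sketch below is illustrative rather than a benchmark: it registers 100 idle pipes (a hypothetical stand-in for mostly idle connections) with one selector, makes three of them readable, and counts what a single `select` call reports. `ReadyOnly` and `readyAfterOneSelect` are hypothetical names.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.spi.SelectorProvider;
import java.util.ArrayList;
import java.util.List;

final class ReadyOnly {
    /** Registers `watched` pipes, makes `active` of them readable, and returns what one select() reports. */
    static int readyAfterOneSelect(int watched, int active) {
        List<Pipe> pipes = new ArrayList<>();
        try (Selector selector = Selector.open()) {
            for (int i = 0; i < watched; i++) {
                Pipe p = Pipe.open();
                pipes.add(p);
                p.source().configureBlocking(false);
                p.source().register(selector, SelectionKey.OP_READ);
            }
            for (int i = 0; i < active; i++) {
                pipes.get(i).sink().write(ByteBuffer.wrap(new byte[] {1})); // make this channel readable
            }
            // The interest set is already registered, so this call reports only the ready channels.
            return selector.select(1_000);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (Pipe p : pipes) {
                try {
                    p.sink().close();
                    p.source().close();
                } catch (IOException ignored) {
                    // best-effort cleanup
                }
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(SelectorProvider.provider().getClass().getName()); // selector implementation in use
        System.out.println(readyAfterOneSelect(100, 3));                        // 3
    }
}
```

`readyAfterOneSelect(100, 3)` returns 3: the wait call reports only the three active channels, not all 100. The first line of `main` prints the selector implementation, for example `sun.nio.ch.EPollSelectorProvider` on Linux.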

Non-Blocking, Asynchronous Operations

Epoll facilitates non-blocking, asynchronous I/O operations by efficiently managing events on file descriptors. This means that WebFlux can handle I/O operations without blocking the execution of other tasks, contributing to the framework's overall responsiveness and ability to scale under heavy loads.

Resource Efficiency

Epoll optimizes resource usage by minimizing the overhead associated with managing large numbers of connections. It achieves this by efficiently monitoring and handling I/O events, reducing the need for excessive context switching and improving overall resource utilization.

2.2 Express.js and Epoll

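Libuv and Epoll

Express.js, being built on Node.js, relies on the libuv library for asynchronous I/O. libuv is a cross-platform library, but it is built on each platform's native event mechanism: Epoll on Linux, kqueue on macOS and the BSDs, and IOCP on Windows. On Linux, Express.js therefore uses Epoll for socket I/O just as Netty does. The more significant difference between the two stacks lies in threading. A single Node.js process runs all JavaScript, including every Express middleware function and route handler, on one event-loop thread, whereas Reactor Netty spreads connections across several event-loop threads, by default about one per available CPU core. Unless an Express application is scaled out with the cluster module or multiple processes, it can use only about one core for request handling, however efficiently the underlying Epoll calls are made.

Platform-Specific Considerations

Epoll is native to Linux, and both runtimes use other mechanisms on other operating systems. On Linux, Netty additionally offers a native Epoll transport which, according to Netty's documentation, adds platform-specific features, generates less garbage, and generally performs better than its portable NIO transport. This highlights the importance of selecting and tuning the technology stack for the target deployment environment, including how many CPU cores each runtime can actually put to work.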

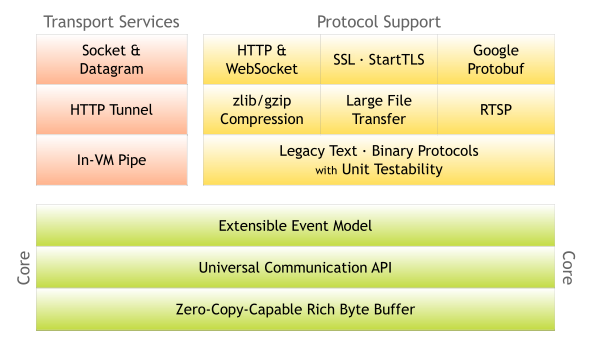

3. Netty's Event-Driven Model

3.1 Event-Driven Architecture

Efficient Handling of Events: Netty's event-driven architecture is designed to efficiently handle I/O events without the need for dedicated threads per connection. In a traditional synchronous model, each connection might require its own thread, leading to resource inefficiency and increased overhead due to context switching. However, in an event-driven model, the focus is on reacting to events, such as incoming data or completed I/O operations, without blocking threads.

Non-Blocking I/O Operations

In an event-driven architecture, I/O operations are non-blocking, meaning that a thread doesn't wait idly for an operation to complete. Instead, when an event occurs, a specific action is triggered. This approach allows a small number of threads to handle a large number of connections concurrently, as each thread can quickly respond to events without waiting for ongoing operations to finish. This non-blocking nature aligns well with the reactive programming paradigm, promoting responsiveness and scalability in WebFlux applications.
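In Netty, those threads are event loops grouped in an `EventLoopGroup`: each channel is registered with one event loop for its whole lifetime, and new channels are handed to the loops in turn. The following Java sketch models only that assignment, using plain single-threaded executors; `EventLoopGroupSketch` and `simulate` are hypothetical names, and a real Netty event loop also runs the selector and the I/O for its channels.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

final class EventLoopGroupSketch {
    private final List<ExecutorService> loops = new ArrayList<>();
    private int next = 0; // only touched by the registering thread

    /** Each hypothetical "event loop" is a single thread that runs its tasks one after another. */
    EventLoopGroupSketch(int loopCount) {
        for (int i = 0; i < loopCount; i++) {
            loops.add(Executors.newSingleThreadExecutor());
        }
    }

    /** Round-robin choice: a new connection is pinned to one loop for its whole lifetime. */
    ExecutorService register() {
        ExecutorService loop = loops.get(next);
        next = (next + 1) % loops.size();
        return loop;
    }

    /** Lets queued tasks finish, then stops every loop thread. */
    void shutdownAndWait() {
        loops.forEach(ExecutorService::shutdown);
        try {
            for (ExecutorService loop : loops) {
                loop.awaitTermination(10, TimeUnit.SECONDS);
            }
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
        }
    }

    /** Fires events for many connections and records which thread handled each connection's events. */
    static Map<Integer, Set<String>> simulate(int loopCount, int connections, int eventsPerConnection) {
        EventLoopGroupSketch group = new EventLoopGroupSketch(loopCount);
        List<ExecutorService> assigned = new ArrayList<>();
        for (int c = 0; c < connections; c++) {
            assigned.add(group.register());
        }
        Map<Integer, Set<String>> handledBy = new ConcurrentHashMap<>();
        for (int e = 0; e < eventsPerConnection; e++) {
            for (int c = 0; c < connections; c++) {
                int conn = c; // effectively final copy for the lambda
                assigned.get(c).execute(() -> handledBy
                        .computeIfAbsent(conn, k -> ConcurrentHashMap.newKeySet())
                        .add(Thread.currentThread().getName()));
            }
        }
        group.shutdownAndWait();
        return handledBy;
    }

    public static void main(String[] args) {
        Map<Integer, Set<String>> handledBy = simulate(4, 1_000, 10);
        Set<String> threads = new HashSet<>();
        handledBy.values().forEach(threads::addAll);
        // prints "1000 connections served by 4 threads"
        System.out.println(handledBy.size() + " connections served by " + threads.size() + " threads");
    }
}
```

Because all events of one connection run on the same thread, that connection's handler code never runs concurrently with itself and needs no locking, while a fixed handful of threads serves any number of connections.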

Seamless Integration with Reactive Programming

The event-driven architecture of Netty seamlessly integrates with the reactive programming paradigm embraced by Spring Boot WebFlux. Reactive programming involves working with asynchronous streams of data and responding to changes, which aligns perfectly with the event-driven model. This integration enables developers to build applications that are highly responsive to input events, making efficient use of system resources.

3.2 Buffer Pooling

Optimizing Memory Usage

Netty's buffer pooling, implemented by its PooledByteBufAllocator, is a crucial optimization for managing memory efficiently. Instead of allocating a new memory buffer for each I/O operation and discarding it afterward, Netty carves buffers out of pre-allocated memory regions (arenas) and returns them to the pool when their reference count drops to zero. This reuse minimizes the overhead of allocation and deallocation, and the allocator's jemalloc-inspired design keeps memory fragmentation in check.

Reducing Garbage Collection Overhead

Frequent memory allocation increases garbage collection overhead, which can hurt the overall performance of an application. By pooling buffers and reusing them, Netty lowers allocation pressure and therefore how often the garbage collector has to run; pooled direct buffers also live outside the Java heap, so the collector never copies their contents. The result is more consistent and predictable performance.
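A toy version of the reuse idea fits in a few lines of Java. This sketch assumes single-threaded use and a single buffer size; `ToyBufferPool` is a hypothetical class and is far simpler than Netty's `PooledByteBufAllocator`, which adds per-thread caches, arenas, size classes, and reference counting.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;

final class ToyBufferPool {
    private final ArrayDeque<ByteBuffer> free = new ArrayDeque<>();
    private final int bufferSize;
    private int allocations;

    ToyBufferPool(int bufferSize) {
        this.bufferSize = bufferSize;
    }

    /** Hands out a released buffer if one is available; allocates only when the pool is empty. */
    ByteBuffer acquire() {
        ByteBuffer buffer = free.pollFirst();
        if (buffer == null) {
            allocations++;
            buffer = ByteBuffer.allocateDirect(bufferSize); // off-heap memory, as preferred for socket I/O
        }
        return buffer.clear();                              // reset position/limit for the next user
    }

    /** Returns a buffer for reuse (Netty does this when a pooled buffer's reference count reaches zero). */
    void release(ByteBuffer buffer) {
        free.addFirst(buffer);
    }

    int allocations() {
        return allocations;
    }

    /** Simulates sequential I/O operations that each borrow one buffer; returns how many were allocated. */
    static int allocationsFor(int operations, int bufferSize) {
        ToyBufferPool pool = new ToyBufferPool(bufferSize);
        for (int i = 0; i < operations; i++) {
            ByteBuffer buffer = pool.acquire();
            buffer.putInt(i);                               // stand-in for filling the buffer with response bytes
            pool.release(buffer);
        }
        return pool.allocations();
    }

    public static void main(String[] args) {
        System.out.println(allocationsFor(100_000, 4096)); // 1: the same buffer is reused every time
    }
}
```

As long as each operation releases its buffer before the next one starts, only one buffer is ever created, so no new buffers accumulate for the garbage collector to reclaim.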

Enhancing Data Transfer Efficiency

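Buffer pooling is complemented by features that make data transfer itself cheaper. Netty favors direct (off-heap) buffers for I/O because they can be passed to the operating system as-is, whereas the contents of heap buffers must first be copied into native memory. Its zero-copy features also avoid copying data between buffers: slices and duplicates share the underlying memory, CompositeByteBuf presents several buffers as one without merging them, and FileRegion can send file contents to a socket via the operating system's sendfile mechanism where available. This lowers CPU utilization, which particularly benefits applications with frequent data exchanges, such as streaming or real-time communication.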
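The idea behind `CompositeByteBuf`, sending several buffers without merging them first, has a JDK counterpart in gathering writes. In the Java sketch below (hypothetical names, with a `Pipe` standing in for a socket), a header and a body stay in two separate direct buffers and are handed to the channel in a single `write(ByteBuffer[])` call, which the JDK typically maps to the `writev` syscall on Linux. Direct buffers are used because the JDK copies heap buffers into temporary native memory before a channel write.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.charset.StandardCharsets;

final class GatheringWrite {
    /** Copies a string into a direct (off-heap) buffer that the JDK can pass to the OS without another copy. */
    private static ByteBuffer direct(String s) {
        byte[] bytes = s.getBytes(StandardCharsets.UTF_8);
        ByteBuffer buffer = ByteBuffer.allocateDirect(bytes.length);
        buffer.put(bytes).flip();
        return buffer;
    }

    /**
     * Sends header and body as two buffers in one gathering write, without merging them into a
     * combined buffer, then reads the bytes back. Small payloads only: nothing reads concurrently.
     */
    static String sendAndReceive(String header, String body) {
        ByteBuffer[] parts = { direct(header), direct(body) };
        int expected = parts[0].remaining() + parts[1].remaining();
        try {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink(); Pipe.SourceChannel source = pipe.source()) {
                while (parts[0].hasRemaining() || parts[1].hasRemaining()) {
                    sink.write(parts);                 // gathering write: both buffers in a single call
                }
                ByteBuffer in = ByteBuffer.allocate(expected);
                while (in.hasRemaining()) {
                    if (source.read(in) < 0) {
                        break;                         // -1 would mean the writer closed early
                    }
                }
                in.flip();
                return StandardCharsets.UTF_8.decode(in).toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(sendAndReceive("HTTP/1.1 200 OK\r\nContent-Length: 5\r\n\r\n", "hello"));
    }
}
```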

3.3 Backpressure Support

Flow Control and Resilience

Backpressure is a crucial feature in reactive systems: it lets a slower downstream consumer signal to a faster upstream producer how much data it is ready to accept, so the producer cannot overwhelm it. In WebFlux, this demand signaling comes from the Reactive Streams contract implemented by Project Reactor (a subscriber requests n items at a time), and Reactor Netty connects it to Netty's own flow-control mechanisms, such as pausing socket reads when there is no demand and checking whether a channel is still writable before sending more. This flow control contributes to the resilience of WebFlux applications by preventing the system from being overloaded with more data than it can handle.
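The JDK ships the Reactive Streams interfaces as `java.util.concurrent.Flow`, which makes it possible to show demand signaling without Reactor on the classpath. In the sketch below, a hypothetical slow subscriber requests one item at a time, and `SubmissionPublisher`, the JDK's built-in publisher, holds the producer back when the subscriber's bounded buffer is full. Reactor's `Flux` follows the same request(n) contract, but its operators and Reactor Netty's socket integration are not modeled here.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

final class BackpressureSketch {
    /** Hypothetical slow consumer that signals demand for exactly one item at a time. */
    static final class OneAtATime implements Flow.Subscriber<Integer> {
        final List<Integer> received = Collections.synchronizedList(new ArrayList<>());
        final CompletableFuture<List<Integer>> done = new CompletableFuture<>();
        private Flow.Subscription subscription;

        @Override public void onSubscribe(Flow.Subscription s) {
            subscription = s;
            s.request(1);                   // initial demand: a single item
        }

        @Override public void onNext(Integer item) {
            received.add(item);             // "process" the item
            subscription.request(1);        // only now ask upstream for the next one
        }

        @Override public void onError(Throwable t) { done.completeExceptionally(t); }

        @Override public void onComplete() { done.complete(received); }
    }

    /** Publishes 0..items-1 through a bounded per-subscriber buffer to the one-at-a-time subscriber. */
    static List<Integer> run(int items, int bufferCapacity) {
        ExecutorService consumerThread = Executors.newSingleThreadExecutor();
        SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>(consumerThread, bufferCapacity);
        OneAtATime subscriber = new OneAtATime();
        publisher.subscribe(subscriber);
        try {
            for (int i = 0; i < items; i++) {
                // submit() blocks while this subscriber's buffer is full, so the fast producer
                // is held to the consumer's pace instead of queueing items without limit.
                publisher.submit(i);
            }
            publisher.close();              // onComplete follows the items already submitted
            return subscriber.done.get(10, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            consumerThread.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(run(1_000, 4).size()); // 1000
    }
}
```

All items arrive in order, yet only about `bufferCapacity` of them (rounded up to a power of two by `SubmissionPublisher`) are ever queued at once: the producer is paced by the consumer's requests instead of flooding it.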

In summary, Netty's event-driven architecture, combined with buffer pooling and support for backpressure, contributes to the high-performance characteristics of Spring Boot WebFlux applications. Together these features let WebFlux handle a large number of concurrent connections while using system resources efficiently, making it well suited for building responsive and scalable applications.

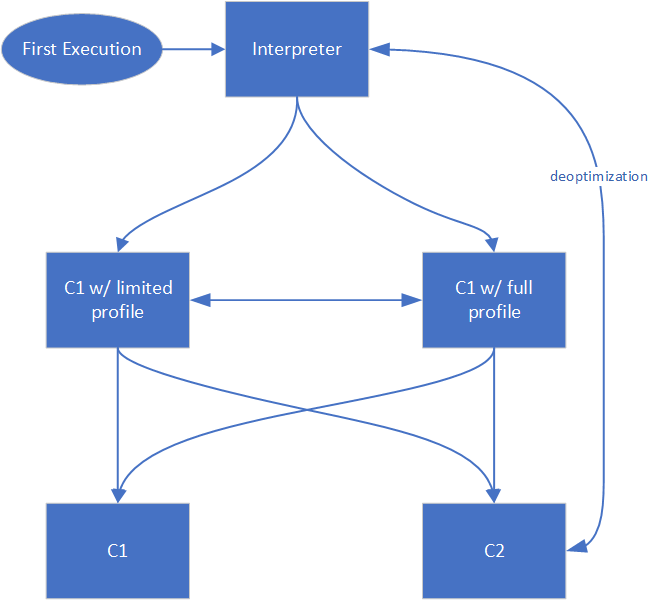

4. JIT Optimizations

4.1 Just-In-Time Compilation in Spring Boot WebFlux

Dynamic Compilation Process: Just-In-Time compilation is a process in which the Java Virtual Machine (JVM) compiles Java bytecode into native machine code at runtime. Unlike Ahead-Of-Time (AOT) compilation, which occurs before execution, JIT compilation lets the JVM optimize code adaptively while the application runs. This suits long-running server processes such as WebFlux applications on Netty, whose hot request-handling paths end up as optimized machine code after a warm-up period.

JVM C2 Compiler

HotSpot, the JVM in OpenJDK, uses tiered compilation with two JIT compilers. Methods start out in the interpreter; once they run often enough, the C1 (client) compiler compiles them quickly with light optimizations plus profiling, and the hottest methods are later recompiled by the C2 compiler, also known as the HotSpot Server Compiler, which performs aggressive optimizations such as inlining, loop unrolling, and escape analysis. WebFlux applications, using Netty and the reactive programming model, benefit from these optimizations because the JVM optimizes the code based on the actual execution patterns it observes.
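Tiered compilation can be watched on any small hot method. The Java program below is a minimal sketch (the class and method names are hypothetical); run it with HotSpot's `-XX:+PrintCompilation` flag, which prints a line for each method as it is compiled.

```java
final class JitWarmup {
    /** Small, frequently called method: a typical candidate for tiered JIT compilation. */
    static long sumOfSquares(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += (long) i * i;
        }
        return sum;
    }

    public static void main(String[] args) {
        long checksum = 0;
        // Call the method often enough for HotSpot to profile it and hand it to C1, then C2.
        for (int round = 0; round < 50_000; round++) {
            checksum += sumOfSquares(1_000);
        }
        System.out.println(checksum); // using the result keeps the JIT from discarding the work
    }
}
```

Running `javac JitWarmup.java` and then `java -XX:+PrintCompilation JitWarmup` typically shows `JitWarmup::sumOfSquares` first at tier 3 (C1 with profiling) and later at tier 4 (C2); exact timings and thresholds depend on the JVM version and flags.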

Adaptive Optimization

JIT compilation is adaptive, meaning it monitors the application's runtime behavior and adjusts the level of optimization accordingly. Code paths that are executed more frequently are subject to more aggressive optimizations. This adaptability allows the JVM to tailor optimizations to the specific characteristics of the WebFlux application as it runs, improving overall performance.

Profiling and Deoptimization

JIT compilation involves profiling the application's execution to identify hotspots: portions of code that are executed frequently. The optimizing compiler also makes speculative assumptions based on that profile, such as which concrete type a call site receives. If one of those assumptions is later invalidated, the JVM deoptimizes the code: execution falls back to the interpreter, and the method may be recompiled with updated profile data. This flexibility lets the JIT compiler adapt to changes in runtime behavior and keep performance high.
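Deoptimization is easiest to provoke with a call site whose receiver type changes. In this hypothetical Java sketch (records require Java 16 or later), `totalArea` runs hot while it only ever sees `Square`, so C2 may compile `s.area()` as an inlined, type-checked call; the first `Circle` then breaks that speculation.

```java
import java.util.Arrays;

final class DeoptSketch {
    interface Shape { double area(); }

    record Square(double side) implements Shape {
        public double area() { return side * side; }
    }

    record Circle(double radius) implements Shape {
        public double area() { return Math.PI * radius * radius; }
    }

    /** Hot method whose call site s.area() the JVM profiles. */
    static double totalArea(Shape[] shapes) {
        double sum = 0;
        for (Shape s : shapes) {
            sum += s.area();
        }
        return sum;
    }

    static double run(int rounds) {
        Shape[] squares = new Shape[1_000];
        Arrays.fill(squares, new Square(2.0));
        double acc = 0;
        // Phase 1: the call site only ever sees Square, so C2 may inline Square.area() behind a type check.
        for (int i = 0; i < rounds; i++) {
            acc += totalArea(squares);
        }
        // Phase 2: a second receiver type breaks that assumption; the compiled code is deoptimized
        // (execution falls back to the interpreter) and the method may later be recompiled.
        Shape[] mixed = { new Square(1.0), new Circle(1.0) };
        for (int i = 0; i < rounds; i++) {
            acc += totalArea(mixed);
        }
        return acc;
    }

    public static void main(String[] args) {
        System.out.println(run(20_000));
    }
}
```

With `-XX:+PrintCompilation`, the compiled version of `totalArea` is typically reported as `made not entrant` shortly after the second phase starts, followed by a recompilation. Whether and when this happens depends on the JVM's profiling and inlining decisions, so it is an observation to try rather than a guaranteed output.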

4.2 V8 Optimization in Express.js

V8 JavaScript Engine

Express.js, being a Node.js framework, relies on the V8 JavaScript engine for executing server-side JavaScript code. V8 is known for its aggressive optimizations: it compiles JavaScript into bytecode for its Ignition interpreter and then uses JIT compilers, most notably the optimizing compiler TurboFan, to turn frequently executed functions into native machine code at runtime.

Inline Caching and Hidden Classes

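V8 employs techniques like hidden classes and inline caching to optimize the execution of JavaScript code. Hidden classes (called maps inside V8) give objects that receive the same properties in the same order a shared layout, so each property sits at a fixed offset. Inline caching builds on this: each property access site remembers the hidden classes it has seen and the matching offsets, so repeated accesses on same-shaped objects skip the generic property lookup. Together they make property access in Express.js applications far cheaper than a dictionary lookup.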
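Both ideas can be modeled in a few dozen lines. The Java sketch below is a toy model, not V8's implementation: `Shape` plays the role of a hidden class (a property-name-to-slot map shared by objects built the same way), and `PropertySite` is a monomorphic inline cache for one access site such as `p.x`. All names are hypothetical, and V8's real machinery (maps, feedback vectors, polymorphic and megamorphic caches) is considerably more involved.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

final class ToyInlineCache {
    /** Toy hidden class: property name -> slot index, shared by objects built in the same order. */
    static final class Shape {
        final Map<String, Integer> offsets;
        private final Map<String, Shape> transitions = new HashMap<>();

        Shape(Map<String, Integer> offsets) { this.offsets = offsets; }

        /** Follows (or creates) the transition for adding one property, so equal build orders converge. */
        Shape withProperty(String name) {
            return transitions.computeIfAbsent(name, n -> {
                Map<String, Integer> next = new HashMap<>(offsets);
                next.put(n, offsets.size());
                return new Shape(next);
            });
        }
    }

    static final Shape ROOT = new Shape(Map.of());

    /** A dynamically typed object: a Shape pointer plus a flat array of slot values. */
    static final class Obj {
        Shape shape = ROOT;
        Object[] slots = new Object[0];

        void put(String name, Object value) {
            Integer offset = shape.offsets.get(name);
            if (offset == null) {                          // new property: transition to a new Shape
                shape = shape.withProperty(name);
                offset = shape.offsets.get(name);
                slots = Arrays.copyOf(slots, slots.length + 1);
            }
            slots[offset] = value;
        }
    }

    /** Monomorphic inline cache for one property-access site (toy: assumes the property exists). */
    static final class PropertySite {
        private final String name;
        private Shape cachedShape;
        private int cachedOffset;
        int misses;

        PropertySite(String name) { this.name = name; }

        Object get(Obj o) {
            if (o.shape != cachedShape) {                  // slow path: full lookup, then cache it
                misses++;
                cachedShape = o.shape;
                cachedOffset = o.shape.offsets.get(name);
            }
            return o.slots[cachedOffset];                  // fast path: identity check plus array load
        }
    }

    static Obj point(int x, int y) {
        Obj p = new Obj();
        p.put("x", x);
        p.put("y", y);
        return p;
    }

    public static void main(String[] args) {
        PropertySite siteX = new PropertySite("x");
        long sum = 0;
        for (int i = 0; i < 1_000; i++) {
            sum += (Integer) siteX.get(point(i, -i));
        }
        System.out.println("sum=" + sum + ", cache misses=" + siteX.misses); // sum=499500, cache misses=1
    }
}
```

Reading `x` from 1,000 points built the same way misses the cache once and then hits every time; an object whose properties were added in a different order gets a different shape and causes another miss, which is why consistent object construction helps V8.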

Optimizing Hot Functions

Similar to the JVM's JIT compilation, V8 optimizes hot functions based on their execution frequency. The engine uses heuristics to identify frequently executed code paths and applies aggressive optimizations to those areas. This adaptability allows V8 to dynamically adjust its optimization strategy to the evolving behavior of the Express.js application.

Garbage Collection Strategies

V8 incorporates efficient garbage collection strategies, such as generational garbage collection, to minimize pauses and improve overall responsiveness. These strategies play a crucial role in maintaining smooth execution and preventing performance degradation due to memory management.

Benchmarks

Server Specifications

- CPU: 8 Cores / 16 Threads Intel Xeon Gold 5120

- RAM: 32 GB DDR5 4800 MHz

- Network: AWS ENA 10 Gbps SR-IOV Enabled

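Benchmarking Tool

Both servers were load-tested with wrk2, the constant-throughput variant of wrk, which installs under the same `wrk` command name. Each run used 16 threads (`-t16`), 100 connections (`-c100`), a 30-second duration (`-d30s`), and a target rate of 250,000 requests per second (`-R250000`).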

Express.js

```
root@abc:/home/abc# wrk -t16 -c100 -d30s -R250000 http://10.160.0.9:3000/
Running 30s test @ http://10.160.0.9:3000/
  16 threads and 100 connections
  Thread calibration: mean lat.: 3712.516ms, rate sampling interval: 13082ms
  Thread calibration: mean lat.: 3665.583ms, rate sampling interval: 12681ms
  Thread calibration: mean lat.: 3843.938ms, rate sampling interval: 13041ms
  Thread calibration: mean lat.: 3929.175ms, rate sampling interval: 13705ms
  Thread calibration: mean lat.: 3860.993ms, rate sampling interval: 13410ms
  Thread calibration: mean lat.: 3614.564ms, rate sampling interval: 13434ms
  Thread calibration: mean lat.: 3997.825ms, rate sampling interval: 13615ms
  Thread calibration: mean lat.: 3747.010ms, rate sampling interval: 12869ms
  Thread calibration: mean lat.: 3675.774ms, rate sampling interval: 13361ms
  Thread calibration: mean lat.: 3816.373ms, rate sampling interval: 13008ms
  Thread calibration: mean lat.: 3623.861ms, rate sampling interval: 12697ms
  Thread calibration: mean lat.: 3827.300ms, rate sampling interval: 13467ms
  Thread calibration: mean lat.: 3730.038ms, rate sampling interval: 12795ms
  Thread calibration: mean lat.: 3852.709ms, rate sampling interval: 13606ms
  Thread calibration: mean lat.: 3889.886ms, rate sampling interval: 13197ms
  Thread calibration: mean lat.: 3864.894ms, rate sampling interval: 13320ms
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    14.53s     4.35s   24.87s    58.95%
    Req/Sec     4.20k   423.52     4.94k    56.25%
  2008799 requests in 30.00s, 457.86MB read
Requests/sec:  66964.94
Transfer/sec:     15.26MB
```

Spring Boot WebFlux

```
root@abc:/home/abc# wrk -t16 -c100 -d30s -R250000 http://10.160.0.9:8080/
Running 30s test @ http://10.160.0.9:8080/
  16 threads and 100 connections
  Thread calibration: mean lat.: 887.759ms, rate sampling interval: 3092ms
  Thread calibration: mean lat.: 1148.061ms, rate sampling interval: 4825ms
  Thread calibration: mean lat.: 874.379ms, rate sampling interval: 3823ms
  Thread calibration: mean lat.: 1546.654ms, rate sampling interval: 5455ms
  Thread calibration: mean lat.: 825.385ms, rate sampling interval: 3325ms
  Thread calibration: mean lat.: 1137.859ms, rate sampling interval: 4218ms
  Thread calibration: mean lat.: 1233.996ms, rate sampling interval: 4984ms
  Thread calibration: mean lat.: 1130.624ms, rate sampling interval: 5177ms
  Thread calibration: mean lat.: 928.639ms, rate sampling interval: 4157ms
  Thread calibration: mean lat.: 1243.508ms, rate sampling interval: 5427ms
  Thread calibration: mean lat.: 1049.552ms, rate sampling interval: 4616ms
  Thread calibration: mean lat.: 1094.318ms, rate sampling interval: 4065ms
  Thread calibration: mean lat.: 1249.297ms, rate sampling interval: 4567ms
  Thread calibration: mean lat.: 1168.444ms, rate sampling interval: 4505ms
  Thread calibration: mean lat.: 1001.284ms, rate sampling interval: 3932ms
  Thread calibration: mean lat.: 1572.793ms, rate sampling interval: 5591ms
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     4.55s     1.62s   10.37s    69.97%
    Req/Sec    12.12k   743.03    13.77k    69.70%
  5787947 requests in 30.00s, 502.30MB read
Requests/sec: 192946.95
Transfer/sec:     16.74MB
```
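The headline numbers can be reproduced from the two wrk2 outputs. Both runs asked wrk2 for 250,000 requests per second, more than either server delivered, so both were driven into saturation; because wrk2 measures latency from each request's scheduled send time, the multi-second averages mostly reflect queueing under overload rather than the time spent handling a single request. The Java snippet below assumes that wrk's `MB` means 2^20 bytes when estimating bytes per response; `WrkSummary` is a hypothetical name.

```java
import java.util.Locale;

final class WrkSummary {
    // Figures copied from the two wrk2 outputs.
    static final double EXPRESS_RPS = 66_964.94;
    static final double WEBFLUX_RPS = 192_946.95;
    static final double EXPRESS_AVG_LATENCY_S = 14.53;
    static final double WEBFLUX_AVG_LATENCY_S = 4.55;
    static final long EXPRESS_REQUESTS = 2_008_799L;
    static final long WEBFLUX_REQUESTS = 5_787_947L;
    static final double EXPRESS_MB_READ = 457.86;
    static final double WEBFLUX_MB_READ = 502.30;

    /** Average response size including headers, assuming wrk's "MB" means 2^20 bytes. */
    static double bytesPerResponse(double mbRead, long requests) {
        return mbRead * 1024 * 1024 / requests;
    }

    public static void main(String[] args) {
        // prints "throughput ratio:  2.88x"
        System.out.printf(Locale.ROOT, "throughput ratio:  %.2fx%n", WEBFLUX_RPS / EXPRESS_RPS);
        // prints "avg latency ratio: 3.19x"
        System.out.printf(Locale.ROOT, "avg latency ratio: %.2fx%n", EXPRESS_AVG_LATENCY_S / WEBFLUX_AVG_LATENCY_S);
        // prints "bytes/response:    Express 239, WebFlux 91"
        System.out.printf(Locale.ROOT, "bytes/response:    Express %.0f, WebFlux %.0f%n",
                bytesPerResponse(EXPRESS_MB_READ, EXPRESS_REQUESTS),
                bytesPerResponse(WEBFLUX_MB_READ, WEBFLUX_REQUESTS));
    }
}
```

The per-response sizes differ by more than a factor of two, which suggests the two endpoints did not return identical responses (in headers, body, or both), so each server did slightly different work per request.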

Conclusion

In summary, in this benchmark Spring Boot WebFlux on Netty served about 2.9 times as many requests per second as Express.js (192,947 vs. 66,965) at roughly a third of the average latency (4.55 s vs. 14.53 s). Netty's high-performing event-driven design, with event loops spread across the available CPU cores, pooled buffers, and backpressure support, together with the optimizations of the JVM's Just-In-Time (JIT) compiler, are the most likely contributors to that gap. This performance-centric approach, coupled with the platform's adaptability and resource efficiency, positions Java as a formidable force in crafting highly responsive and scalable web applications. In this race, WebFlux emerged as the clear frontrunner, providing developers with a robust foundation for high-throughput services.
